Obese surgical patients can safely use GLP-1 therapy to reduce risk of complications, study concludes

Powered by WPeMatico

A new study published in JAMA Dermatology found that rilzabrutinib reduced itch and hives while maintaining a favorable benefit-risk profile, suggesting that it may be a useful therapy for patients with moderate to severe chronic spontaneous urticaria (CSU) that does not respond adequately to antihistamines.

CSU is driven primarily by the activation of cutaneous mast cells through a variety of pathways. Bruton tyrosine kinase (BTK), a protein found in B cells and mast cells, is essential to several immune-mediated disease processes. The study therefore evaluated the efficacy and safety of rilzabrutinib, an oral, reversible covalent, next-generation BTK inhibitor, in patients with CSU.

The Rilzabrutinib Efficacy and Safety in CSU (RILECSU) randomized clinical trial was a 52-week phase 2 study consisting of a 12-week double-blind, placebo-controlled, dose-ranging period followed by a 40-week open-label extension. The trial ran from November 24, 2021, to April 23, 2024.

Participants were recruited and randomized at 51 centers in 12 countries across Asia, Europe, North America, and South America. The trial enrolled adults aged 18 to 80 years with moderate to severe CSU (weekly Urticaria Activity Score [UAS7] of 16 or higher and weekly Itch Severity Score [ISS7] of 8 or higher) that was inadequately controlled with H1-antihistamines. Patients were randomized 1:1:1:1 to rilzabrutinib 400 mg once daily in the evening (400 mg/d), 400 mg twice daily (800 mg/d), 400 mg three times daily (1200 mg/d), or matched placebo.
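The enrollment thresholds above combine two weekly diary scores. As a minimal sketch, assuming the standard definitions (they are not spelled out in this summary), a patient rates hives and itch once a day on 0 to 3 scales; ISS7 is the sum of the seven daily itch scores, the weekly Hives Severity Score (HSS7) is the sum of the seven daily hive scores, and UAS7 is the sum of both (range 0 to 42). The function names and the sample diary are hypothetical; this illustrates the arithmetic, not the trial's scoring procedure.

```python
from typing import Dict, Sequence, Tuple

# One diary entry per day: (hive_score, itch_score), each on a 0-3 scale.
DailyEntry = Tuple[int, int]


def weekly_scores(diary: Sequence[DailyEntry]) -> Dict[str, int]:
    """Sum a 7-day diary into HSS7, ISS7 and UAS7 (standard definitions assumed)."""
    if len(diary) != 7:
        raise ValueError("weekly scores need exactly 7 daily entries")
    for hives, itch in diary:
        if not (0 <= hives <= 3 and 0 <= itch <= 3):
            raise ValueError("daily scores must be between 0 and 3")
    hss7 = sum(hives for hives, _ in diary)  # range 0-21
    iss7 = sum(itch for _, itch in diary)    # range 0-21
    return {"HSS7": hss7, "ISS7": iss7, "UAS7": hss7 + iss7}  # UAS7 range 0-42


def meets_enrollment_severity(scores: Dict[str, int]) -> bool:
    """Moderate-to-severe CSU thresholds quoted for RILECSU: UAS7 >= 16 and ISS7 >= 8."""
    return scores["UAS7"] >= 16 and scores["ISS7"] >= 8


# Hypothetical patient: hive score 1 and itch score 2 on every day of the week.
example = weekly_scores([(1, 2)] * 7)
print(example, meets_enrollment_severity(example))
```

Both thresholds must hold: a patient with many hives but little itch (for example, a daily hive score of 3 and itch score of 1) reaches a UAS7 of 28 yet fails the ISS7 criterion.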

A total of 160 participants, including omalizumab-naive patients and patients with an incomplete response to omalizumab (mean [SD] age, 44.1 [13.4] years; 112 [70.0%] female), were randomized. The primary analysis population comprised only the 143 omalizumab-naive participants. At week 12, rilzabrutinib 1200 mg/d significantly reduced both ISS7 (least squares [LS] mean change, −9.21 vs −5.77; difference, −3.44 [95% CI, −6.25 to −0.62]; P = .02) and UAS7 (LS mean change, −16.89 vs −10.14; difference, −6.75 [95% CI, −12.23 to −1.26]) from baseline compared with placebo.
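The treatment effects above are between-group differences in LS mean change from baseline, computed as rilzabrutinib minus placebo, so a negative value means a larger symptom reduction with the drug. The short sketch below reproduces that subtraction from the reported figures and checks whether each 95% CI lies entirely below zero; it does not re-estimate the LS means themselves, which come from the trial's statistical model. The helper names are hypothetical.

```python
from typing import Dict, Tuple


def ls_mean_difference(drug_change: float, placebo_change: float) -> float:
    """Between-group difference in LS mean change (drug minus placebo), 2 d.p."""
    return round(drug_change - placebo_change, 2)


def ci_excludes_zero(ci: Tuple[float, float]) -> bool:
    """True when a confidence interval lies entirely on one side of zero."""
    low, high = ci
    return high < 0 or low > 0


# Week-12 figures reported for rilzabrutinib 1200 mg/d vs placebo.
reported: Dict[str, dict] = {
    "ISS7": {"drug": -9.21, "placebo": -5.77, "ci": (-6.25, -0.62)},
    "UAS7": {"drug": -16.89, "placebo": -10.14, "ci": (-12.23, -1.26)},
}

for score, r in reported.items():
    diff = ls_mean_difference(r["drug"], r["placebo"])
    print(f"{score}: difference {diff}, 95% CI excludes 0: {ci_excludes_zero(r['ci'])}")
```

An interval that excludes zero is consistent with a statistically significant difference at the corresponding two-sided 5% level, which matches the significance reported for both scores.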

Improvements were also seen in the weekly Angioedema Activity Score (AAS7) and the weekly Hives Severity Score (HSS7). ISS7, UAS7, HSS7, and AAS7 improved as early as week 1. At week 12, levels of CSU-related biomarkers, including immunoglobulin G (IgG) anti-thyroid peroxidase, soluble Mas-related G protein–coupled receptor X2, IgG anti-Fc-ε receptor 1, and interleukin-31, were lower with rilzabrutinib than with placebo.

Overall, the RILECSU findings showed that rilzabrutinib 1200 mg/d was effective over the 12-week period, with a rapid onset of action and an acceptable adverse event profile.

Reference:

Giménez-Arnau, A., Ferrucci, S., Ben-Shoshan, M., Mikol, V., Lucats, L., Sun, I., Mannent, L., & Gereige, J. (2025). Rilzabrutinib in antihistamine-refractory chronic spontaneous urticaria: The RILECSU phase 2 randomized clinical trial. JAMA Dermatology, 161(7), 679–687. https://doi.org/10.1001/jamadermatol.2025.0733

USA: A large-scale study published in the Journal of Periodontology by Muhammad H. A. Saleh and Hamoun Sabri of the University of Michigan School of Dentistry has found a dose-dependent association between the severity of periodontitis and the presence of multiple systemic health conditions.

Using data from electronic health records spanning 2013 to 2023, the researchers analyzed 264,913 adult patients treated at nine U.S. dental schools, examining the links between gum disease severity—classified as none, mild/moderate, or severe—and 24 selected systemic and behavioral conditions.

The study revealed the following notable findings:

The findings highlight the complex interplay between oral and systemic health and suggest that worsening gum disease may reflect or exacerbate underlying medical conditions. Because of its cross-sectional design, the research could not establish cause and effect, but it underscores the value of periodontal assessment as a potential marker of broader health risks. The study emphasizes that medical and dental professionals should collaborate more closely in managing patients with chronic conditions so that oral health is integrated into overall healthcare strategies.

The size of the dataset, covering more than a quarter of a million patients, lends robustness to the results; however, the authors acknowledge several limitations. Using treatment codes as indicators of disease severity may not fully capture clinical nuances. In addition, because the data came from individuals actively receiving dental care, the sample may not represent the general population. Other potential confounders, such as specific oral hygiene habits, were not available, leaving room for residual confounding.

Despite these caveats, the study has important implications. The clear risk gradient, with odds ratios for severe periodontitis consistently exceeding those for milder disease, suggests that monitoring gum health could help identify individuals at greater risk of conditions such as diabetes, cardiovascular disease, HIV, and Alzheimer’s disease. Conversely, inverse associations with certain conditions, such as asthma, point to areas where further research is needed.
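The risk gradient described above can be illustrated with crude odds ratios. The sketch below uses made-up counts (not study data) for a single condition and compares each severity group with the no-periodontitis group. The study's published estimates may have been adjusted for other covariates, which this sketch does not attempt.

```python
from typing import Dict, Tuple

Counts = Tuple[int, int]  # (patients with the condition, patients without it)


def odds_ratio(group: Counts, reference: Counts) -> float:
    """Crude (unadjusted) odds ratio of a condition in `group` vs `reference`."""
    cases, noncases = group
    ref_cases, ref_noncases = reference
    return (cases / noncases) / (ref_cases / ref_noncases)


# HYPOTHETICAL counts for one systemic condition, by periodontitis severity.
counts: Dict[str, Counts] = {
    "none": (1000, 9000),
    "mild/moderate": (300, 1700),
    "severe": (200, 800),
}

ors = {
    severity: odds_ratio(c, counts["none"])
    for severity, c in counts.items()
    if severity != "none"
}
# A dose-dependent pattern: the odds rise with each step up in severity.
dose_dependent = 1 < ors["mild/moderate"] < ors["severe"]
print({k: round(v, 2) for k, v in ors.items()}, dose_dependent)
```

An odds ratio above 1 means the condition is more common in that severity group than in patients without periodontitis; an inverse association, as reported for asthma, would show up as an odds ratio below 1.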

“Overall, the work reinforces the message that oral health is inseparable from systemic health and should be prioritized in comprehensive patient care,” the authors concluded.

Reference:

Saleh, M. H. A., & Sabri, H. Dose-dependent association of systemic comorbidities with periodontitis severity: A large population cross-sectional study. Journal of Periodontology. https://doi.org/10.1002/JPER.25-0055

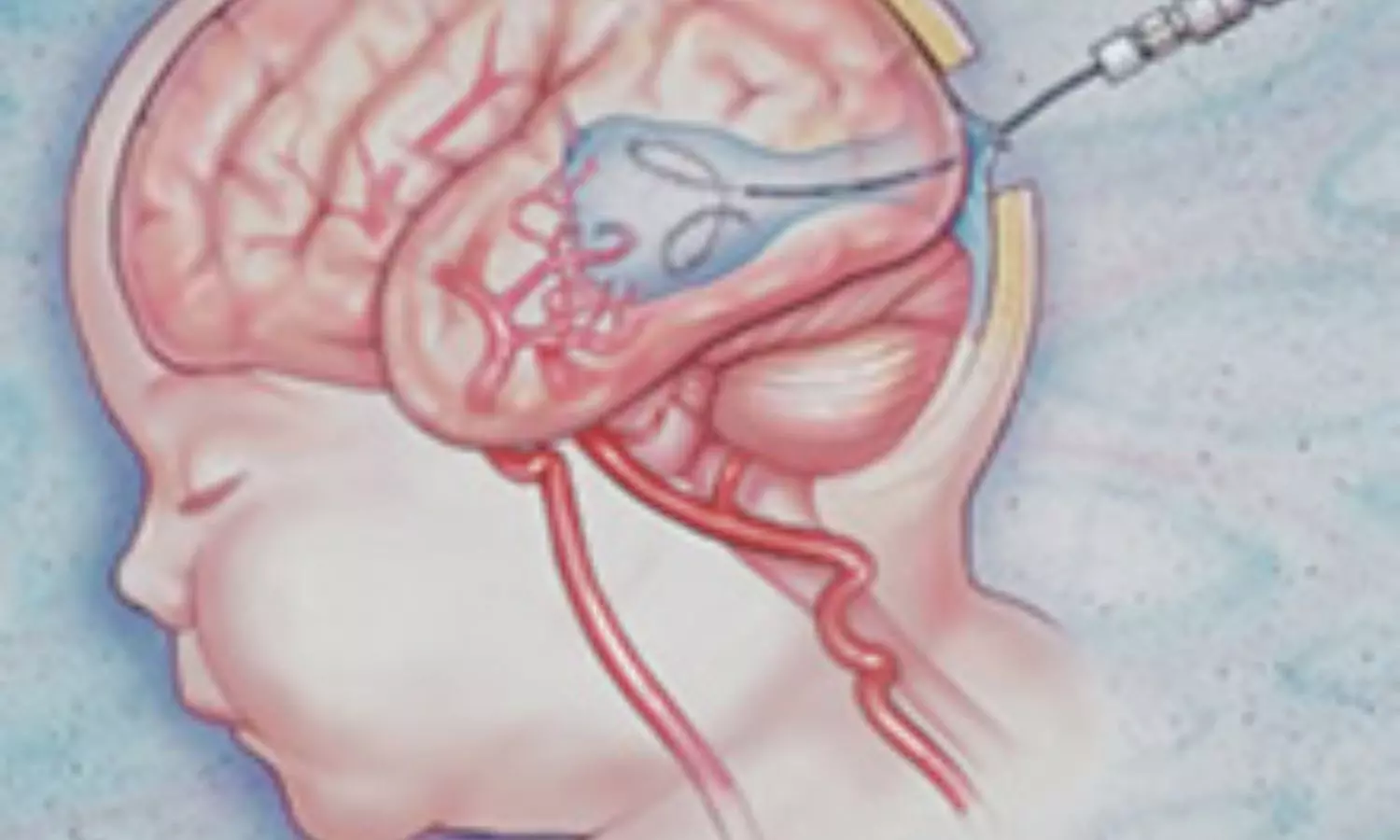

USA: Early single-center data suggest that in utero embolization for high-risk Vein of Galen Malformation (VOGM) is feasible, with surviving infants showing normal neurodevelopment; however, the risk of preterm delivery needs further investigation.

A preliminary communication published in JAMA by Dr. Darren B. Orbach and colleagues from Boston Children’s Hospital describes the first systematic attempt to treat this rare congenital cerebrovascular condition before birth. VOGM, the most common fetal brain blood vessel malformation, can cause life-threatening complications after delivery, particularly when the mediolateral falcine sinus is significantly widened. Infants with high-risk measurements face extremely poor survival odds and a high likelihood of neurodevelopmental delay under standard postnatal care.

The study, conducted at a single U.S. center between September 2022 and April 2025, enrolled seven fetuses diagnosed with high-risk VOGM. Eligibility required no major brain injury on fetal MRI and a falcine sinus width of at least 7 mm. Using ultrasound guidance, the team accessed the fetal brain through the uterus and skull, navigating a microcatheter to the malformed vein and deploying detachable coils to reduce abnormal blood flow.

The following were the key findings of the study:

The authors note that historically, fetuses with similar severity measurements have a mortality risk approaching 90%, with only about 9% achieving early developmental milestones under standard treatment. In the current study, overall mortality was 43%, and 43% of infants were on track at six months.

While the results are encouraging, the researchers stress that the findings are preliminary. The small sample size reflects the rarity of high-risk fetal VOGM, and the procedures were all performed in a high-volume tertiary care setting by specialists with significant experience in fetal and neurointerventional surgery. Longer-term follow-up will be essential to fully assess developmental outcomes, and broader multicenter studies will be required to determine if the approach can be replicated safely elsewhere.
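To see why a seven-patient series is preliminary, note that 43% of seven fetuses corresponds to 3 of 7. A minimal sketch using the Wilson score interval (a standard choice for small binomial samples, not necessarily the authors' method) shows how wide the 95% confidence interval around such a proportion is. The function name is hypothetical.

```python
import math
from typing import Tuple


def wilson_interval(successes: int, n: int, z: float = 1.96) -> Tuple[float, float]:
    """Wilson score interval for a binomial proportion (z = 1.96 gives ~95%)."""
    if n <= 0 or not 0 <= successes <= n:
        raise ValueError("need n > 0 and 0 <= successes <= n")
    p = successes / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return centre - half_width, centre + half_width


# 43% of 7 fetuses corresponds to 3 of 7 (the only whole-number count that fits).
low, high = wilson_interval(3, 7)
print(f"observed {3 / 7:.0%}, 95% CI {low:.0%} to {high:.0%}")
```

With only seven patients, the interval spans roughly 16% to 75%, which illustrates why the authors call for longer follow-up and broader multicenter studies.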

Still, the authors describe the work as a landmark in fetal neurosurgery: the first targeted effort to treat a congenital cerebrovascular anomaly before birth by directly altering fetal brain blood flow. They concluded that the approach, while promising, must be weighed carefully against the increased risk of preterm delivery, with decisions guided by multidisciplinary expertise.

Reference:

Orbach DB, Shamshirsaz AA, Wilkins-Haug L, et al. In Utero Embolization for Fetal Vein of Galen Malformation. JAMA. Published online August 11, 2025. doi:10.1001/jama.2025.12363

Sweden: Researchers from Linköping University have found that chlorhexidine is more effective than ethanol at reducing bacterial counts during buttonhole cannulation in patients undergoing haemodialysis via an arteriovenous fistula (AVF). The study, led by Karin Staaf and colleagues from the Department of Health, Medicine and Caring Sciences, was published in BMC Nephrology.

AVF infections are commonly caused by the patient’s own skin bacteria, and the buttonhole technique—where needles are inserted at the same site each time—increases this risk due to repeated skin penetration. While proper disinfection is essential to mitigate this risk, there has been limited evidence guiding the optimal disinfectant choice. This study aimed to determine whether chlorhexidine offers superior protection compared to ethanol in this setting.

The randomized crossover trial involved patients undergoing haemodialysis and compared 5 mg/mL chlorhexidine in 70% ethanol with 70% ethanol alone, each with and without prior arm washing. Bacterial samples were collected at several time points (before disinfection, immediately after disinfection, and two and four hours after disinfection) across four dialysis sessions. In addition, scabs from the buttonhole tract were analyzed to identify the bacteria present and whether they matched the patient’s normal skin flora.
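The four conditions compared in this crossover design form a 2 × 2 grid of disinfectant and arm washing. A minimal sketch, assuming each patient received every condition once across the four dialysis sessions in a random order (the summary does not state the exact allocation scheme), could generate per-patient session orders as follows. The function name and seeding scheme are hypothetical.

```python
import random
from itertools import product
from typing import List, Tuple

# Assumed 2 x 2 layout of the compared conditions.
DISINFECTANTS = ("chlorhexidine 5 mg/mL in 70% ethanol", "70% ethanol")
WASHING = ("arm washed first", "no arm washing")
CONDITIONS: List[Tuple[str, str]] = list(product(DISINFECTANTS, WASHING))


def session_order(patient_id: int, seed: int = 0) -> List[Tuple[str, str]]:
    """Hypothetical allocation: a reproducible random order of all four conditions."""
    rng = random.Random(f"{seed}-{patient_id}")  # separate, repeatable stream per patient
    order = CONDITIONS.copy()
    rng.shuffle(order)
    return order


for pid in (1, 2, 3):
    for session, (disinfectant, washing) in enumerate(session_order(pid), start=1):
        print(f"patient {pid}, session {session}: {disinfectant}, {washing}")
```

Because every patient serves as their own control, between-patient differences in skin flora cancel out of the comparison. A plain shuffle does not balance conditions across session positions; a balanced Latin square (Williams design) is a common alternative when period effects are a concern.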

The key findings were as follows:

Although the results are promising, the researchers acknowledged several limitations. Bacterial counts (colony-forming units, CFU/mL) were used as a surrogate marker instead of actual infection rates, because a large-scale infection trial would pose ethical and logistical challenges. The single-centre design and small sample size may limit generalizability, and the single-blind design may have introduced bias. Furthermore, patient behavior during dialysis, such as leaning on the disinfected arm or covering it, may have introduced variability in outcomes.

Despite these constraints, the research provides valuable insight into infection control practices in dialysis units. Chlorhexidine delayed bacterial regrowth, which may in turn lower the overall risk of AVF-related infections. As the authors emphasized, preventing infections caused by patients’ own skin flora is a critical responsibility for dialysis providers, and adopting chlorhexidine-based disinfection protocols could be a step toward that goal.

The authors concluded, “The study adds to the growing body of evidence suggesting that chlorhexidine may be a more reliable option than ethanol for disinfection in buttonhole cannulation, thereby enhancing patient safety in haemodialysis settings.”

Reference:

Staaf, K., Scheer, V., Serrander, L. et al. Disinfection with chlorhexidine is more effective than ethanol for buttonhole cannulation in arteriovenous fistula: a randomized cross-over trial. BMC Nephrol 26, 402 (2025). https://doi.org/10.1186/s12882-025-04230-z

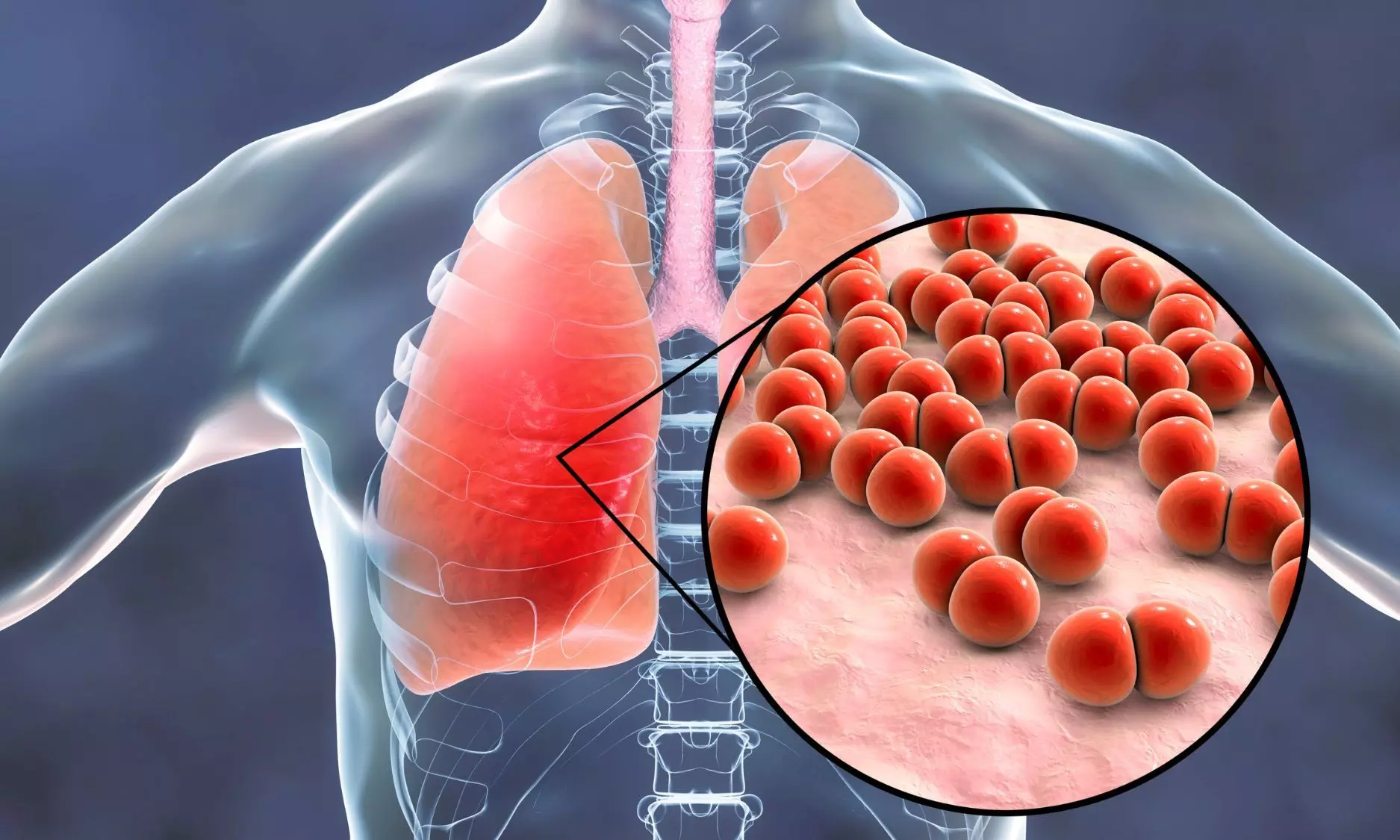

A new study published in Open Forum Infectious Diseases showed that, in adults hospitalized with Mycoplasma pneumoniae pneumonia, tetracyclines were associated with shorter hospital stays than macrolides and fluoroquinolones, and with a shorter fever duration than fluoroquinolones.

Understanding the epidemiology of Mycoplasma pneumoniae pneumonia is essential for informed diagnostic decisions and optimal treatment plans, especially given the disease’s recent resurgence following a period of decline during the COVID-19 pandemic. M pneumoniae infections occur both endemically and in epidemics, which peak roughly every four years.

Because of their low minimum inhibitory concentrations and good tolerability, macrolides are often used as first-line therapy for M pneumoniae infections. This study aimed to characterize the incidence, patient characteristics, treatments, and outcomes of adults hospitalized with M pneumoniae pneumonia.

This retrospective cohort study included adults with M pneumoniae pneumonia who presented to emergency departments in Stockholm County, Sweden, between 2013 and 2017. Patients were identified by the ICD-10 code J15.7 (M pneumoniae pneumonia) and a positive M pneumoniae polymerase chain reaction test. Population data were obtained from statistical sources, and medical records were reviewed manually.

Of the 747 adults with M pneumoniae pneumonia, 385 (52%) were hospitalized; the median age was 42 (interquartile range [IQR], 33–55) years. The incidence rate was 8.5 cases per 100,000 person-years, peaking at 14.1 in 2016. At admission, 71% were hypoxemic, and the most common symptoms were cough (95%) and fever (92%).

Patients with severe illness had a longer duration of symptoms at admission. In-hospital mortality was 0.4%, and 6% of patients required admission to an intensive care unit. Compared with patients treated with tetracyclines, those treated with fluoroquinolones (+0.8 [IQR, 0.1–1.4] days; P = .03) and macrolides (+1.0 [IQR, 0.9–1.2] days; P < .001) had a longer hospital stay (median length of stay, 4 [IQR, 2–6] days).

Patients treated with fluoroquinolones also had a significantly longer median fever duration than those treated with tetracyclines (+0.3 [IQR, 0.1–0.6] days; P = .02). Overall, the results emphasize the value of prompt and accurate therapy and suggest possible advantages of doxycycline, a tetracycline, as a first-line treatment.

Reference:

Hagman, K., Nilsson, A. C., Hedenstierna, M., & Ursing, J. (2025). Epidemiology, characteristics, and treatment outcomes of Mycoplasma pneumoniae pneumonia in hospitalized adults: A 5-year retrospective cohort study. Open Forum Infectious Diseases, 12(7), ofaf380. https://doi.org/10.1093/ofid/ofaf380

A landmark study has revealed that autoantibodies, immune proteins traditionally associated with autoimmune disease, may profoundly influence how cancer patients respond to immunotherapy.

The study, published in Nature, offers a potential breakthrough in solving one of modern oncology’s most frustrating mysteries: why checkpoint inhibitors work for some patients but not others, and how their benefits can be extended to more people.

“Our analysis shows that certain naturally occurring autoantibodies can tilt the odds dramatically toward shrinking tumors,” said senior author Aaron Ring, MD, PhD, an associate professor at Fred Hutch Cancer Center. “We saw some cases where autoantibodies boosted a patient’s likelihood of responding to checkpoint blockade by as much as five- to ten-fold.”

The Nature study suggests that autoantibodies could help reveal cancer’s weak spots and point to new targets for treatment.

Autoantibodies are proteins produced by the immune system that recognize the body’s own tissues. They are most associated with their harmful role in driving autoimmune diseases like lupus or rheumatoid arthritis. However, emerging evidence indicates that in some cases, autoantibodies can surprisingly exert health benefits.

“For years, autoantibodies were viewed mainly as bad actors in autoimmune disease, but we’re discovering they can also act as potent, built-in therapeutics,” said Ring, who holds the Anderson Family Endowed Chair for Immunotherapy at Fred Hutch. “My lab is mapping this hidden pharmacology so we can turn these natural molecules into new treatments for cancer and other illnesses.”

In the Nature study, Ring and his collaborators used REAP (Rapid Extracellular Antigen Profiling), a high-throughput assay he developed, to screen for more than 6,000 types of autoantibodies in blood samples from 374 cancer patients receiving checkpoint inhibitors and 131 healthy individuals.

Checkpoint inhibitors have transformed treatment for a wide range of cancers including melanoma and non-small cell lung cancer by unleashing the immune system to see and attack cancer. But not all patients respond to these treatments and, in many cases, their anti-tumor effects are incomplete and do not result in a cure.

The REAP analyses of these blood samples revealed that cancer patients had substantially higher levels of autoantibodies than healthy controls.

Importantly, certain autoantibodies were strongly linked to better clinical outcomes, indicating their potential role in enhancing the effectiveness of immunotherapy.

For example, autoantibodies that blocked an immune signal called interferon were linked to better anti-tumor effects from checkpoint inhibitors. This finding mirrors other studies showing how too much interferon can exhaust the immune system and then curtail the effects of immunotherapy.

“In some patients, their immune system essentially brewed its own companion drug,” Ring explained. “Their autoantibodies neutralized interferon and that amplified the effect of checkpoint blockade. This finding gives us a clear blueprint for combination therapies that intentionally modulate the interferon pathway for everyone else.”

Not all autoantibodies were beneficial. The team discovered several that were associated with worse outcomes from checkpoint inhibitors, likely because they disrupted critical immune pathways necessary for anti-tumor responses. Finding ways to eliminate or counteract these detrimental autoantibodies could open another promising avenue for enhancing the effectiveness of immunotherapy.

“This is only the beginning,” Ring said. “We’re now extending the search to other cancers and treatments so we can harness — or bypass — autoantibodies to make immunotherapy work for far more patients.”

Reference:

Dai, Y., Aizenbud, L., Qin, K. et al. Humoral determinants of checkpoint immunotherapy. Nature (2025). https://doi.org/10.1038/s41586-025-09188-4

A new study has found that total thyroidectomy can be performed safely in obese patients without an increased risk of surgery-related complications, despite the longer operative times associated with higher BMI.

Obesity is associated with an increased risk of postoperative morbidity, and the researchers aimed to analyze the impact of BMI on surgical complications in patients undergoing thyroidectomy. This retrospective study was conducted at a single academic center and considered 484 patients who underwent open total thyroidectomy as eligible. Patients were divided into non-obese (BMI < 30 kg/m2) and obese (BMI ≥ 30 kg/m2) groups, and 1:2 case matching on demographic (age and gender) and clinical (benign or malignant disease) variables was performed to create homogeneous study groups. The two groups were then compared for the occurrence of surgery-related outcomes.

After case matching, 193 non-obese and 98 obese patients were included in the final analysis. Rates of the primary outcomes did not differ significantly between the non-obese and obese groups (non-obese versus obese): hypoparathyroidism (transient: 29% versus 21.4%, p = 0.166; permanent: 11.4% versus 15.3%, p = 0.344) and recurrent laryngeal nerve palsy (transient: 13.9% versus 11.2%, p = 0.498; permanent: 3.1% versus 2.0%, p = 0.594). A BMI ≥ 30 kg/m2 was associated with a significantly longer operative time (p = 0.018), while other secondary outcomes were not significantly affected by BMI. The authors concluded that, despite prolonged operative times in obese patients, total thyroidectomy could be performed safely and without increased risk of surgery-related morbidity, regardless of BMI.
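The 1:2 case matching described above can be sketched as a greedy procedure: each obese patient is matched exactly on sex and on benign or malignant disease, then to the closest-age non-obese patients still available. The five-year age caliper, the greedy order, and the rule of keeping a case that finds only one control are all assumptions for illustration; the paper's actual matching algorithm is not described in this summary, and the cohort below is hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Patient:
    pid: int
    age: int
    sex: str         # "F" or "M"
    malignant: bool  # benign (False) vs malignant (True) thyroid disease
    obese: bool      # BMI >= 30 kg/m2


def match_one_to_two(patients: List[Patient], caliper_years: int = 5) -> Dict[int, List[int]]:
    """Greedy 1:2 matching of obese cases to non-obese controls (illustrative only).

    Exact on sex and disease type, nearest age within a caliper, each control
    used at most once. A case is kept if at least one control is found.
    """
    cases = sorted((p for p in patients if p.obese), key=lambda p: p.pid)
    pool = [p for p in patients if not p.obese]
    used: set = set()
    matches: Dict[int, List[int]] = {}
    for case in cases:
        candidates = sorted(
            (
                c for c in pool
                if c.pid not in used
                and c.sex == case.sex
                and c.malignant == case.malignant
                and abs(c.age - case.age) <= caliper_years
            ),
            key=lambda c: (abs(c.age - case.age), c.pid),
        )
        chosen = candidates[:2]  # up to two closest-age controls
        if chosen:
            used.update(c.pid for c in chosen)
            matches[case.pid] = [c.pid for c in chosen]
    return matches


# Hypothetical cohort.
cohort = [
    Patient(1, 50, "F", False, True),
    Patient(2, 48, "F", False, False),
    Patient(3, 53, "F", False, False),
    Patient(4, 70, "F", False, False),  # outside the age caliper
    Patient(5, 40, "M", True, True),
    Patient(6, 41, "M", True, False),
    Patient(7, 41, "F", True, False),   # different sex from case 5
]
print(match_one_to_two(cohort))
```

Allowing a case to keep a single control when a second is unavailable is one way a matched sample can end up with slightly fewer than twice as many controls as cases; stricter schemes drop such cases instead.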

Reference:

Vaghiri, S., Mirheli, J., Prassas, D. et al. The BMI impact on thyroidectomy-related morbidity; a case-matched single institutional analysis. BMC Surg 25, 286 (2025). https://doi.org/10.1186/s12893-025-03018-0

A new study that examined older and newer medications to treat seizures has found that using some medications during pregnancy is linked to an increased risk of malformations at birth, or birth defects. The study is published July 16, 2025, in Neurology®, the medical journal of the American Academy of Neurology.

“Seizures can lead to falls and other complications during pregnancy, so seizure control for those with epilepsy is crucial to protect the health of both the mother and child,” said study author Sonia Hernandez-Diaz, MD, DrPH, of Harvard T.H. Chan School of Public Health in Boston, Massachusetts. “While some older drugs are known to increase the risk of major malformations, less is known about the safety of newer ‘second generation’ medications. Our study looked at a number of drugs and provides valuable information for health care providers and people who may become pregnant to make more informed decisions about the use of these medications during pregnancy.”

For the study, researchers looked at 7,311 women who were taking an antiseizure medication during the first trimester of pregnancy. They were compared to 1,311 women who did not take antiseizure medications.

Participants completed phone interviews at the start of the study, at seven months pregnant, and within three months after delivery.

Researchers confirmed birth defects through medical records. These included cleft lip, larger-than-normal holes in the heart, neural tube defects where the spinal cord does not develop properly such as spina bifida, missing or underdeveloped limbs and other issues where parts of the body did not form correctly.

They then compared the risk of these birth defects among infants exposed to specific antiseizure medications during the first trimester with the risk among infants exposed to lamotrigine, an antiseizure medication that served as the reference group.

Researchers found that the risk of major birth defects varied among medications. Valproate posed the highest risk, with major birth defects occurring in 9% of infants exposed. Phenobarbital followed at 6%. Among newer drugs, birth defects occurred in 5% of those exposed to topiramate, while the group exposed to lamotrigine had malformations in 2% of births.

When compared to those who took lamotrigine, those who took valproate had more than five times the risk of birth defects, those who took phenobarbital had nearly three times the risk, and those taking topiramate had over twice the risk.

“Our results confirm that using valproate, phenobarbital or topiramate during early pregnancy is linked to a higher chance of major birth defects in the infants when compared to lamotrigine,” Hernandez-Diaz said. “On the other hand, our results did not show an increased risk with medications like levetiracetam, oxcarbazepine, gabapentin and zonisamide. For lacosamide and pregabalin, the data wasn’t clear enough to make solid conclusions, so more research is needed.”

A limitation of the study was that participants were enrolled months after conception and in most cases early losses of pregnancy were not assessed for malformations, so the number of malformations overall may have been underestimated.

Reference:

Hernandez-Diaz, S. Use of Antiseizure Medications Early in Pregnancy and the Risk of Major Malformations in the Newborn. Neurology (2025). https://doi.org/10.1212/WNL.0000000000213959

A new study published in Nature Medicine has investigated whether blood biomarkers such as phosphorylated tau217 (p-tau217), neurofilament light chain (NfL), and glial fibrillary acidic protein (GFAP) can predict dementia, including Alzheimer’s disease, up to ten years before diagnosis in cognitively healthy older adults living in the community.

Previous research has suggested that these biomarkers could be useful for early dementia diagnosis, but most studies involved individuals who had already sought medical care because of cognitive concerns or symptoms such as memory difficulties.

A larger, community-based study was needed to determine the predictive value of these biomarkers in the general population.

Led by researchers from the Aging Research Center of Karolinska Institutet in collaboration with SciLifeLab and KTH Royal Institute of Technology in Stockholm, the study analysed blood biomarkers in more than 2,100 adults aged 60+, who were followed over time to determine if they developed dementia.

At a follow-up ten years later, 17 percent of participants had developed dementia. The accuracy of the biomarkers used in the study was found to be up to 83 percent.

“This is an encouraging result, especially considering the 10-year predictive window between testing and diagnosis. It shows that it is possible to reliably identify individuals who develop dementia and those who will remain healthy,” says Giulia Grande, assistant professor at the Department of Neurobiology, Care Sciences and Society, Karolinska Institutet, and first author of the study.

“Our findings imply that if an individual has low levels of these biomarkers, their risk of developing dementia over the next decade is minimal”, explains Davide Vetrano, associate professor at the same department and the study’s senior author. “This information could offer reassurance to individuals worried about their cognitive health, as it potentially rules out the future development of dementia.”

However, the researchers also found that these biomarkers had low positive predictive values, meaning that elevated levels alone could not reliably identify individuals who would go on to develop dementia within the next ten years. The authors therefore advise against using these biomarkers for population-wide screening at this stage.

“These biomarkers are promising, but they are currently not suitable as standalone screening tests to identify dementia risk in the general population,” says Davide Vetrano.
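Why can low biomarker levels be reassuring while high levels are not conclusive? Predictive values depend on how common the outcome is. The sketch below uses the 17% of participants who developed dementia by the ten-year follow-up, but the 80% sensitivity and specificity are hypothetical values chosen for illustration, not the study's results (the "up to 83 percent" figure is a summary accuracy measure that this sketch does not reproduce).

```python
from typing import Tuple


def predictive_values(sensitivity: float, specificity: float, prevalence: float) -> Tuple[float, float]:
    """Positive and negative predictive values from test accuracy and outcome frequency."""
    true_pos = sensitivity * prevalence
    false_neg = (1 - sensitivity) * prevalence
    true_neg = specificity * (1 - prevalence)
    false_pos = (1 - specificity) * (1 - prevalence)
    ppv = true_pos / (true_pos + false_pos)
    npv = true_neg / (true_neg + false_neg)
    return ppv, npv


# 17% developed dementia within ten years (from the study);
# 80% sensitivity and specificity are HYPOTHETICAL, for illustration only.
ppv, npv = predictive_values(sensitivity=0.80, specificity=0.80, prevalence=0.17)
print(f"PPV = {ppv:.0%}, NPV = {npv:.0%}")
```

With these assumed inputs, the negative predictive value is about 95% while the positive predictive value is only about 45%, mirroring the pattern the researchers describe: most people who test low stay dementia-free, but fewer than half of those who test high go on to develop dementia.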

The researchers also noted that combining p-tau217 with NfL or GFAP could improve predictive accuracy.

“Further research is needed to determine how these biomarkers can be effectively used in real-world settings, especially for elderly living in the community or in primary health care services,” says Grande.

“We need to move a step further and see whether the combination of these biomarkers with other clinical, biological or functional information could improve the possibility of these biomarkers to be used as screening tools for the general population”, Grande continues.

Reference:

Grande, G., Valletta, M., Rizzuto, D. et al. Blood-based biomarkers of Alzheimer’s disease and incident dementia in the community. Nat Med (2025). https://doi.org/10.1038/s41591-025-03605-x
