Smart device uses AI and bioelectronics to speed up wound healing process, reveals study

As a wound heals, it goes through several stages: clotting to stop bleeding, immune system response, scabbing, and scarring.

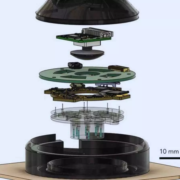

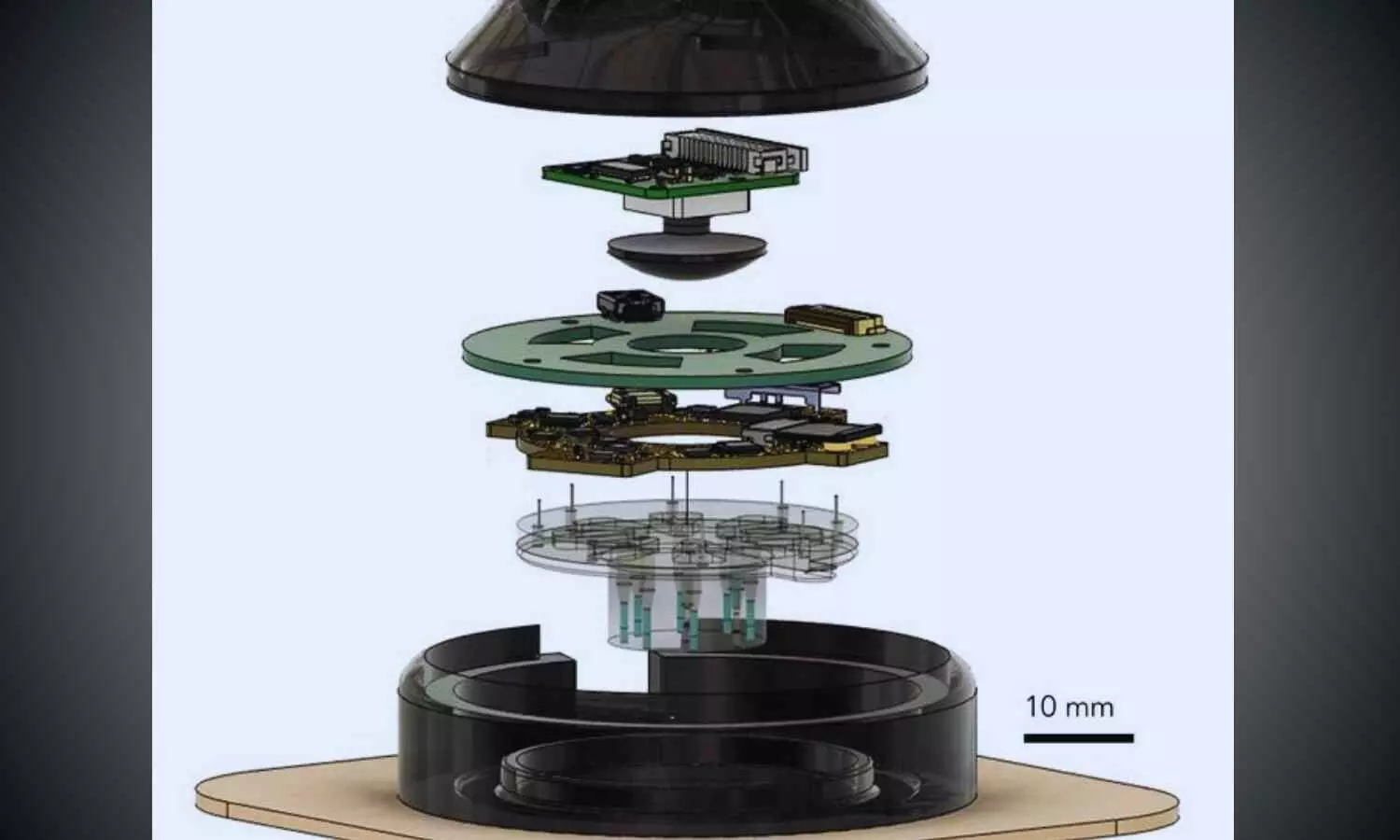

A wearable device called “a-Heal,” designed by engineers at the University of California, Santa Cruz, aims to optimize each stage of the process. The system uses a tiny camera and AI to detect the stage of healing and deliver a treatment in the form of medication or an electric field. The system responds to the unique healing process of the patient, offering personalized treatment.

The portable, wireless device could make wound therapy more accessible to patients in remote areas or with limited mobility. Initial preclinical results, published in the journal npj Biomedical Innovations, show the device successfully speeds up the healing process.

Designing a-Heal

A team of UC Santa Cruz and UC Davis researchers, sponsored by the DARPA-BETR program and led by Marco Rolandi, UC Santa Cruz Baskin Engineering Endowed Chair and Professor of Electrical and Computer Engineering (ECE), designed a device that combines a camera, bioelectronics, and AI for faster wound healing. Integrating these components in one device makes it a “closed-loop system,” one of the first of its kind for wound healing as far as the researchers are aware.

“Our system takes all the cues from the body, and with external interventions, it optimizes the healing progress,” Rolandi said.

The device uses an onboard camera, developed by Associate Professor of ECE Mircea Teodorescu and described in a Communications Biology study, to photograph the wound every two hours. The photos are fed into a machine learning (ML) model that the researchers call the “AI physician,” which runs on a nearby computer and was developed by Associate Professor of Applied Mathematics Marcella Gomez.

“It’s essentially a microscope in a bandage,” Teodorescu said. “Individual images say little, but over time, continuous imaging lets AI spot trends, wound healing stages, flag issues, and suggest treatments.”

The AI physician uses each image to diagnose the wound's stage and compares it with where the wound should be on a timeline of optimal healing. If the image reveals a lag, the ML model applies a treatment: either medication, delivered through bioelectronics, or an electric field, which can enhance cell migration toward wound closure.
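To make the comparison concrete, here is a minimal Python sketch of a lag check. It assumes each image has already been reduced to a single healing-progress score between 0 (fresh wound) and 1 (closed), and it uses an invented reference timeline. The names `REFERENCE_TIMELINE`, `expected_progress`, `healing_lag` and `needs_treatment`, as well as the 0.05 tolerance, are hypothetical and do not come from the study.

```python
from bisect import bisect_right

# Hypothetical "optimal" timeline: (hours since wounding, expected progress).
# Progress is an assumed scalar in [0, 1]; these numbers are invented for illustration.
REFERENCE_TIMELINE = [(0.0, 0.0), (24.0, 0.15), (72.0, 0.45), (144.0, 0.80), (240.0, 1.0)]


def expected_progress(hours, timeline=REFERENCE_TIMELINE):
    """Linearly interpolate the reference progress at a given time."""
    times = [t for t, _ in timeline]
    if hours <= times[0]:
        return timeline[0][1]
    if hours >= times[-1]:
        return timeline[-1][1]
    i = bisect_right(times, hours)  # timeline[i - 1] <= hours < timeline[i]
    (t0, p0), (t1, p1) = timeline[i - 1], timeline[i]
    return p0 + (p1 - p0) * (hours - t0) / (t1 - t0)


def healing_lag(observed, hours):
    """Positive when the wound is behind the reference trajectory."""
    return expected_progress(hours) - observed


def needs_treatment(observed, hours, tolerance=0.05):
    """Hypothetical rule: intervene only when the lag exceeds a tolerance."""
    return healing_lag(observed, hours) > tolerance
```

For example, a wound scored at 0.20 at 48 hours sits about 0.10 behind the interpolated reference of 0.30, so `needs_treatment(0.20, 48)` returns `True`.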

The drug delivered topically through the device is fluoxetine, a selective serotonin reuptake inhibitor that controls serotonin levels in the wound and improves healing by decreasing inflammation and increasing wound tissue closure. The dose, determined in preclinical studies by the Isseroff group at UC Davis to optimize healing, is administered by the device's bioelectronic actuators, which Rolandi developed. The device also delivers an electric field, optimized to improve healing and based on prior work by UC Davis researchers Min Zhao and Roslyn Rivkah Isseroff.

The AI physician determines the optimal dosage of medication to deliver and the magnitude of the applied electric field. After the therapy has been applied for a certain period of time, the camera takes another image, and the process starts again.
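The image, decide, treat, repeat cycle can be sketched as a simple feedback loop. This is an illustration under stated assumptions, not the study's controller. The wound is a toy simulation (`ToyWound`) with made-up healing rates, the target is a straight-line trajectory, each loop iteration stands in for one two-hour imaging interval, and a basic proportional rule takes the place of the AI physician's learned policy in setting a single treatment intensity between 0 and 1.

```python
from dataclasses import dataclass


@dataclass
class ToyWound:
    """Hypothetical wound model (not from the study): progress runs from 0 (open)
    to 1 (closed) and grows faster when treatment intensity u in [0, 1] is applied."""
    progress: float = 0.0
    base_rate: float = 0.004  # assumed progress per cycle with no treatment
    gain: float = 0.006       # assumed extra progress per cycle at full intensity

    def step(self, u: float) -> float:
        self.progress = min(1.0, self.progress + self.base_rate + self.gain * u)
        return self.progress


def target_progress(cycle: int, rate_per_cycle: float = 0.007) -> float:
    """Assumed 'optimal' trajectory: straight-line progress toward closure."""
    return min(1.0, rate_per_cycle * cycle)


def proportional_intensity(lag: float, k_p: float = 20.0, u_max: float = 1.0) -> float:
    """Treat harder the further behind the wound is; no treatment when on or ahead of target."""
    return max(0.0, min(u_max, k_p * lag))


def run_closed_loop(cycles: int = 200):
    """Each iteration stands in for one two-hour imaging interval."""
    wound = ToyWound()
    history = []  # (cycle, observed progress, applied intensity)
    for cycle in range(cycles):
        observed = wound.progress                # stand-in for imaging + AI diagnosis
        lag = target_progress(cycle) - observed  # compare with the reference trajectory
        u = proportional_intensity(lag)          # pick drug dose / field strength
        wound.step(u)                            # apply therapy until the next image
        history.append((cycle, observed, u))
    return wound.progress, history
```

With these invented numbers, the treated toy wound closes well within 200 cycles, while the same model left untreated reaches only about 0.8 progress over that span. Proportional control is used here only because it is the simplest closed-loop rule.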

While in use, the device transmits images and data such as healing rate to a secure web interface, so a human physician can intervene manually and fine-tune treatment as needed. The device attaches directly to a commercially available bandage for convenient and secure use.

To assess the potential for clinical use, the UC Davis team tested the device in preclinical wound models. In these studies, wounds treated with a-Heal followed a healing trajectory about 25% faster than standard of care. These findings highlight the promise of the technology not only for accelerating closure of acute wounds, but also for jump-starting stalled healing in chronic wounds.

AI reinforcement

The AI model, whose development was led by Gomez, uses a reinforcement learning approach, described in a study in the journal Bioengineering, to mimic the diagnostic approach used by physicians.

Reinforcement learning is a technique in which a model is designed to fulfill a specific end goal, learning through trial and error how best to achieve it. In this context, the model's goal is to minimize time to wound closure, and it is rewarded for making progress toward that goal. It continually learns from the patient and adapts its treatment approach.
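As a toy illustration of that trial-and-error learning, the sketch below runs tabular Q-learning, a standard reinforcement learning algorithm, on an invented wound model. The five discrete stages, the three actions and every probability in `ADVANCE_P` are hypothetical, chosen only so that the best action changes partway through healing. The reward is -1 per treatment cycle, so maximizing total reward is equivalent to minimizing time to closure. This does not reproduce the model described in the Bioengineering study.

```python
import random

N_STAGES = 5  # hypothetical discretized healing stages 0..4; stage 5 means closed
ACTIONS = ("none", "drug", "field")
# Invented per-cycle probabilities of advancing one stage, indexed [action][stage].
# Not data from the study; chosen so the best action changes partway through.
ADVANCE_P = {
    "none":  [0.1, 0.1, 0.1, 0.1, 0.1],
    "drug":  [0.8, 0.8, 0.2, 0.2, 0.2],
    "field": [0.2, 0.2, 0.8, 0.8, 0.8],
}


def step(stage, action, rng):
    """Simulate one treatment cycle. Reward is -1 per cycle, so maximizing
    total reward is the same as minimizing time to closure."""
    if rng.random() < ADVANCE_P[action][stage]:
        stage += 1
    return stage, -1.0, stage == N_STAGES


def train_q(episodes=5000, alpha=0.05, gamma=1.0, epsilon=0.1, seed=0):
    """Tabular Q-learning with epsilon-greedy exploration (trial and error)."""
    rng = random.Random(seed)
    q = [[0.0] * len(ACTIONS) for _ in range(N_STAGES + 1)]  # last row is terminal, stays 0
    for _ in range(episodes):
        stage, done = 0, False
        while not done:
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))  # explore
            else:
                a = max(range(len(ACTIONS)), key=lambda i: q[stage][i])  # exploit
            nxt, reward, done = step(stage, ACTIONS[a], rng)
            target = reward if done else reward + gamma * max(q[nxt])
            q[stage][a] += alpha * (target - q[stage][a])
            stage = nxt
    return q


def greedy_policy(q):
    """Best-known action for each non-terminal stage."""
    return [ACTIONS[max(range(len(ACTIONS)), key=lambda i: q[s][i])] for s in range(N_STAGES)]
```

After training, the greedy policy recommends `drug` for the first two stages and `field` for the remaining three, which is the fastest choice under these made-up probabilities.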

The reinforcement learning model is guided by Deep Mapper, an algorithm created by Gomez and her students and described in a preprint study. Deep Mapper processes wound images to quantify the stage of healing relative to normal progression, mapping each wound along the healing trajectory. As the device remains on a wound, the algorithm learns a linear dynamic model of past healing and uses it to forecast how healing will continue to progress.
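The forecasting idea can be illustrated with the simplest possible linear dynamic model. This sketch assumes each image has been mapped to a scalar progress score and fits the one-step model `x[k+1] = a * x[k] + c` by ordinary least squares. Deep Mapper's actual representation and model are described in the preprint and may differ substantially; `fit_linear_dynamics`, `forecast` and `steps_until` are hypothetical names.

```python
def fit_linear_dynamics(history):
    """Ordinary least squares fit of x[k+1] = a * x[k] + c to a progress series."""
    xs, ys = history[:-1], history[1:]
    n = len(xs)
    if n < 2:
        raise ValueError("need at least three observations")
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("progress never changed, so the slope is undetermined")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    a = sxy / sxx
    c = mean_y - a * mean_x
    return a, c


def forecast(x_last, a, c, steps):
    """Roll the fitted model forward from the most recent observation."""
    out, x = [], x_last
    for _ in range(steps):
        x = a * x + c
        out.append(x)
    return out


def steps_until(x_last, a, c, threshold=0.95, max_steps=1000):
    """Predicted number of further intervals until progress reaches threshold, or None."""
    x = x_last
    for k in range(1, max_steps + 1):
        x = a * x + c
        if x >= threshold:
            return k
    return None
```

Given ten noiseless readings generated with `a = 0.9` and `c = 0.1`, the fit recovers those values, and `steps_until` predicts that progress will pass 0.95 twenty intervals after the last reading.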

“It’s not enough to just have the image, you need to process that and put it into context. Then, you can apply the feedback control,” Gomez said.

This technique lets the algorithm learn, in real time, how the drug or electric field affects healing, which in turn guides the reinforcement learning model's iterative decisions about adjusting the drug concentration or electric-field strength.
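One common way to learn a treatment's effect in real time is recursive least squares (RLS), sketched below for a single treatment intensity `u` between 0 and 1. The model, in which each interval's progress increment equals `theta0 + theta1 * u`, is an illustrative assumption, and so is the certainty-equivalent rule `dose_for_target`, which simply solves for the intensity that would hit a desired increment. Both `EffectEstimator` and `dose_for_target` are hypothetical names. Unlike the reinforcement learning model in the study, this rule does not explore on its own.

```python
class EffectEstimator:
    """Recursive least squares for the hypothetical model
    increment = theta0 + theta1 * u, with u the treatment intensity in [0, 1]."""

    def __init__(self, p0: float = 1000.0, forgetting: float = 1.0):
        self.theta = [0.0, 0.0]          # [baseline healing rate, treatment effect]
        self.P = [[p0, 0.0], [0.0, p0]]  # large values mean high initial uncertainty
        self.lam = forgetting            # values below 1.0 discount old data

    def update(self, u: float, increment: float) -> list:
        phi = [1.0, u]
        P = self.P
        p_phi = [P[0][0] * phi[0] + P[0][1] * phi[1],
                 P[1][0] * phi[0] + P[1][1] * phi[1]]
        denom = self.lam + phi[0] * p_phi[0] + phi[1] * p_phi[1]
        gain = [p_phi[0] / denom, p_phi[1] / denom]
        error = increment - (self.theta[0] * phi[0] + self.theta[1] * phi[1])
        self.theta = [self.theta[0] + gain[0] * error,
                      self.theta[1] + gain[1] * error]
        # P <- (P - gain * phi^T P) / lam, using phi^T P = (P phi)^T since P is symmetric
        self.P = [[(P[i][j] - gain[i] * p_phi[j]) / self.lam for j in range(2)]
                  for i in range(2)]
        return self.theta


def dose_for_target(theta, desired_increment, u_max=1.0):
    """Certainty-equivalent choice: intensity that would yield the desired increment."""
    baseline, effect = theta
    if effect <= 0.0:
        return 0.0  # no evidence under this model that treatment helps
    return max(0.0, min(u_max, (desired_increment - baseline) / effect))
```

Feeding the estimator a mix of low, medium and full intensities lets it separate the baseline healing rate from the treatment effect. With noiseless data generated from an increment of `0.004 + 0.006 * u`, it recovers both coefficients, and `dose_for_target(est.theta, 0.007)` returns an intensity of about 0.5.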

Now, the research team is exploring the potential for this device to improve healing of chronic and infected wounds.

Reference:

Li, H., Yang, Hy., Lu, F. et al. Towards adaptive bioelectronic wound therapy with integrated real-time diagnostics and machine learning–driven closed-loop control. npj Biomed. Innov. 2, 31 (2025). https://doi.org/10.1038/s44385-025-00038-6.
