Toddlers showed slightly fewer behavioral problems during COVID-19 pandemic, study finds

Powered by WPeMatico

Madrid: Routine coronary computed tomography (CCT)-based follow-up after percutaneous coronary intervention (PCI) of the left main coronary artery did not reduce death, myocardial infarction (MI), unstable angina or stent thrombosis compared with symptom-based follow-up, according to late-breaking research presented in a Hot Line session today at ESC Congress 2025.

The left main coronary artery supplies a large proportion of the heart muscle, and significant left main coronary artery disease is associated with high morbidity and mortality. The introduction of coronary stents, together with improvements in technology and pharmacological management, has increased the use of PCI in these high-risk patients, with results similar to those of coronary artery bypass grafting.

“Detrimental complications, such as stent restenosis, and recurrent ischaemic events can occur after left main PCI; however, the optimal surveillance strategy remains a subject of debate,” explained trial presenter Dr Ovidio De Filippo from Hospital Città della Salute e della Scienza di Torino, Turin, Italy.

“In recent years, CCT has emerged as a valuable tool for diagnosis and monitoring, providing accuracy comparable to invasive angiography, while minimising procedural risks and reducing healthcare costs. We conducted the first randomised trial to evaluate the potential benefit of routine CCT-based follow-up at 6 months compared with standard symptom- and ischaemia-driven management in patients after PCI for left main disease.”

PULSE was an open-label, blinded-endpoint, investigator-initiated, randomised trial conducted at 15 sites in Europe and South America.

Participants were consecutive patients with critical stenosis undergoing PCI for left main coronary artery disease. They were randomised 1:1 to either CCT-guided follow-up at 6 months (experimental arm) or standard symptom- and ischaemia-driven management (control arm). Participants were then followed for an additional 12 months (total follow-up, 18 months).

In the CCT arm, if significant left main in-stent restenosis was detected, patients underwent invasive coronary angiography followed by target lesion revascularisation if in-stent restenosis was confirmed. If any significant stenosis was detected at a different site, it was managed according to current guidelines. In the standard-of-care arm, patients were managed per clinical guidelines and according to each centre’s standard practice. The primary endpoint was a composite of all-cause death, spontaneous MI, unstable angina or definite/probable stent thrombosis at 18 months.

A total of 606 patients were randomised; their mean age was 69 years, and 18% were female. CCT was performed in 89.8% of patients in the experimental arm, at a median of 200 days.

A primary-endpoint event occurred in 11.9% of patients in the CCT arm and 12.5% of patients in the control arm at 18 months (hazard ratio [HR] 0.97; 95% confidence interval [CI] 0.76 to 1.23; p=0.80).

There was a reduced risk of spontaneous MI in the CCT arm vs. the control arm (0.9% vs. 4.9%; HR 0.26; 95% CI 0.07 to 0.91; p=0.004). An increase in imaging-triggered target-lesion revascularisation was observed in the CCT arm compared with the control arm (4.9% vs. 0.3%; HR 7.7; 95% CI 1.70 to 33.7; p=0.001); however, the incidence of clinically driven target-lesion revascularisation was similar between the arms (5.3% vs. 7.2%; HR 0.74; 95% CI 0.38 to 1.41; p=0.32).

Summing up the main findings, Principal Investigator Professor Fabrizio D’Ascenzo, also from Hospital Città della Salute e della Scienza di Torino, said: “Systematic 6-month CCT-based follow-up did not result in a reduction in 18-month all-cause death, spontaneous MI, unstable angina and stent thrombosis. While universal CCT-based follow-up may not be useful, the marked reduction in spontaneous MI and identification of obstructive lesions requiring repeat PCI suggest this approach may be worth investigating further in selected patients with complex anatomies and over longer follow-up.”

Pulsed field ablation did not have superior efficacy to radiofrequency ablation in patients with drug-resistant paroxysmal (intermittent) atrial fibrillation, according to results from a late-breaking trial presented in a Hot Line session today at ESC Congress 2025.

Atrial fibrillation (AF) is the most common sustained cardiac arrhythmia. Patients whose AF is not controlled by antiarrhythmic drugs may undergo catheter ablation to disrupt the abnormal electrical pathways that cause the arrhythmia.

Principal Investigator, Professor Pierre Jaïs from the IHU LIRYC (L’Institut de Rythmologie et Modélisation Cardiaque), Bordeaux, France, explained why the trial was carried out: “Pulmonary vein isolation using thermal radiofrequency-based ablation (RFA) is a widely accepted and established treatment for antiarrhythmic drug-resistant AF. However, pulmonary vein isolation has evolved with the introduction of pulsed field ablation (PFA), which is a faster, more straightforward nonthermal procedure that potentially offers more selective tissue targeting than thermal energy sources. Other trials have compared PFA with thermal energy sources with inconclusive results.2,3 We conducted the BEAT-PAROX-AF trial to directly compare PFA with advanced RFA in patients with antiarrhythmic drug-resistant symptomatic paroxysmal AF.”

BEAT-PAROX-AF was an open-label, randomised controlled superiority trial conducted at nine high-volume centres across France, Czechia, Germany, Austria and Belgium. Eligible patients were aged 18–80 years with symptomatic paroxysmal AF that was resistant to at least one antiarrhythmic drug, with a Class I or IIa indication for AF ablation according to ESC Guidelines and effective oral anticoagulation for >3 weeks prior to the planned procedure. Patients were randomised 1:1 to pulmonary vein isolation using either single-shot PFA or point-by-point RFA following the CLOSE protocol. The primary endpoint was the single-procedure success rate after 12 months, defined as the absence of ≥30-second atrial arrhythmia recurrence, cardioversion, Class I/III antiarrhythmic drug resumption after a 2-month blanking period or any repeat ablation. For follow-up, participants were instructed to perform weekly self-recorded single-lead ECGs and to capture recordings during symptomatic episodes using a mobile ECG system.

A total of 289 patients were analysed; their mean age was 63.5 years, and 42% were female. The mean duration of drug-resistant AF was 39 months.

The primary endpoint, single-procedure success at 12 months, was high and similar between the procedure types: 77.2% in the PFA group and 77.6% in the RFA group, with an adjusted difference of 0.9% (95% confidence interval [CI] –8.2 to 10.1; p=0.84).

The mean total procedure duration was significantly shorter for PFA (56 vs. 95 minutes), with an adjusted difference of −39 minutes (95% CI −44 to −34).

Overall, the safety profile was excellent in both groups. Procedure-related serious adverse events, including unplanned or prolonged hospitalisations, occurred in 5 patients (3.4%) in the PFA group and 11 patients (7.6%) in the RFA group. Complications appeared more frequent with RFA: one transient ischaemic attack was observed with PFA, while two tamponades requiring percutaneous drainage and two cases of pulmonary vein stenosis >70% were observed with RFA. Pulmonary vein stenosis >50% occurred in 12 and 15 patients in the PFA and RFA groups, respectively. There were no deaths, strokes or cases of persistent phrenic nerve palsy.

Professor Jaïs concluded: “Both PFA and RFA using the CLOSE protocol showed excellent and similar efficacy. Single-procedure success rates were comparable, although there appeared to be fewer complications and a shorter procedure time with PFA.”

Self-harm in young people is a major public health concern: rates are rising, and adolescence is a critical period for intervention. Another modern challenge facing adolescents is sleep deficiency, with global reductions in total sleep time, inconsistent sleep patterns and as many as 70% of teenagers getting inadequate sleep.

In a study published today in the Journal of Child Psychology and Psychiatry, researchers at the University of Warwick and the University of Birmingham investigated the relationship between multiple measures of sleep problems and self-harm, using data from over 10,000 teenagers in the Millennium Cohort Study.

At age 14, the teenagers were asked about sleep problems, including how long they slept on school days, how long it took them to fall asleep and how often they woke during the night. They were also asked whether they had self-harmed, a question repeated three years later when they were surveyed at age 17.

Michaela Pawley, PhD Candidate, Department of Psychology, University of Warwick, and first author said: “Using large scale data like this really allows you to explore longitudinal relationships at a population level. In this analysis, we discovered that shorter sleep on school days, longer time to fall asleep and more frequent night awakenings at age 14 associated with self-harm concurrently and 3 years later at age 17.”

“While this is clearly an unfavourable relationship, one positive from this research is that sleep is a modifiable risk factor – we can actually do something about it. If the link between sleep and self-harm holds true and with well-placed interventions in schools and homes, there is a lot we can do to turn the tide.”

The researchers found that sleep problems at age 14 were directly associated with self-harm at age 14 and again at age 17, suggesting that teenage sleep may have long-lasting effects on self-harm risk and could offer an avenue for supporting teenagers at risk.

Sleep problems contributed to risk even after accounting for other factors shown to influence self-harm, such as age, sex, socio-economic status, previous self-harm, self-esteem and, importantly, levels of depression. Notably, only sleep was consistently significant both cross-sectionally (age 14) and longitudinally (age 17).

Senior author Professor Nicole Tang, Director of Warwick Sleep and Pain Lab at The University of Warwick added: “Self-harm is one of the leading causes of death among adolescents and young adults. It is a sobering topic. Knowing that poor and fragmented sleep is often a marker preceding or co-occurring with suicidal thoughts and behaviour, it gives us a useful focus for risk monitoring and early prevention.”

The researchers also explored what might explain this relationship, testing the idea that poor sleep is linked to poorer decision-making, which in turn increases the risk of self-harm. This hypothesis was not supported, leaving open the question of how poor sleep is associated with self-harm risk.

Regardless, because adolescence is a critical period of vulnerability and potential prevention for self-harm, the study emphasises that sleep health needs to be prioritised in adolescents. Doing so could have long-lasting protective effects.

Reference:

Michaela Pawley, Isabel Morales-Muñoz, Andrew P Bagshaw, Nicole K Y Tang, The longitudinal role of sleep on self-harm during adolescence: A birth cohort study, Journal of Child Psychology and Psychiatry, https://doi.org/10.1111/jcpp.70018.

Belgium: The European Society of Cardiology (ESC) has published the “2025 ESC Guidelines for the management of cardiovascular disease and pregnancy,” endorsed by the European Society of Gynecology (ESG), in the European Heart Journal. Updating the 2018 version, the guidelines integrate new evidence to address cardiovascular disease (CVD) as a leading cause of maternal mortality and morbidity and offer refined strategies for pregnant women with cardiac conditions.

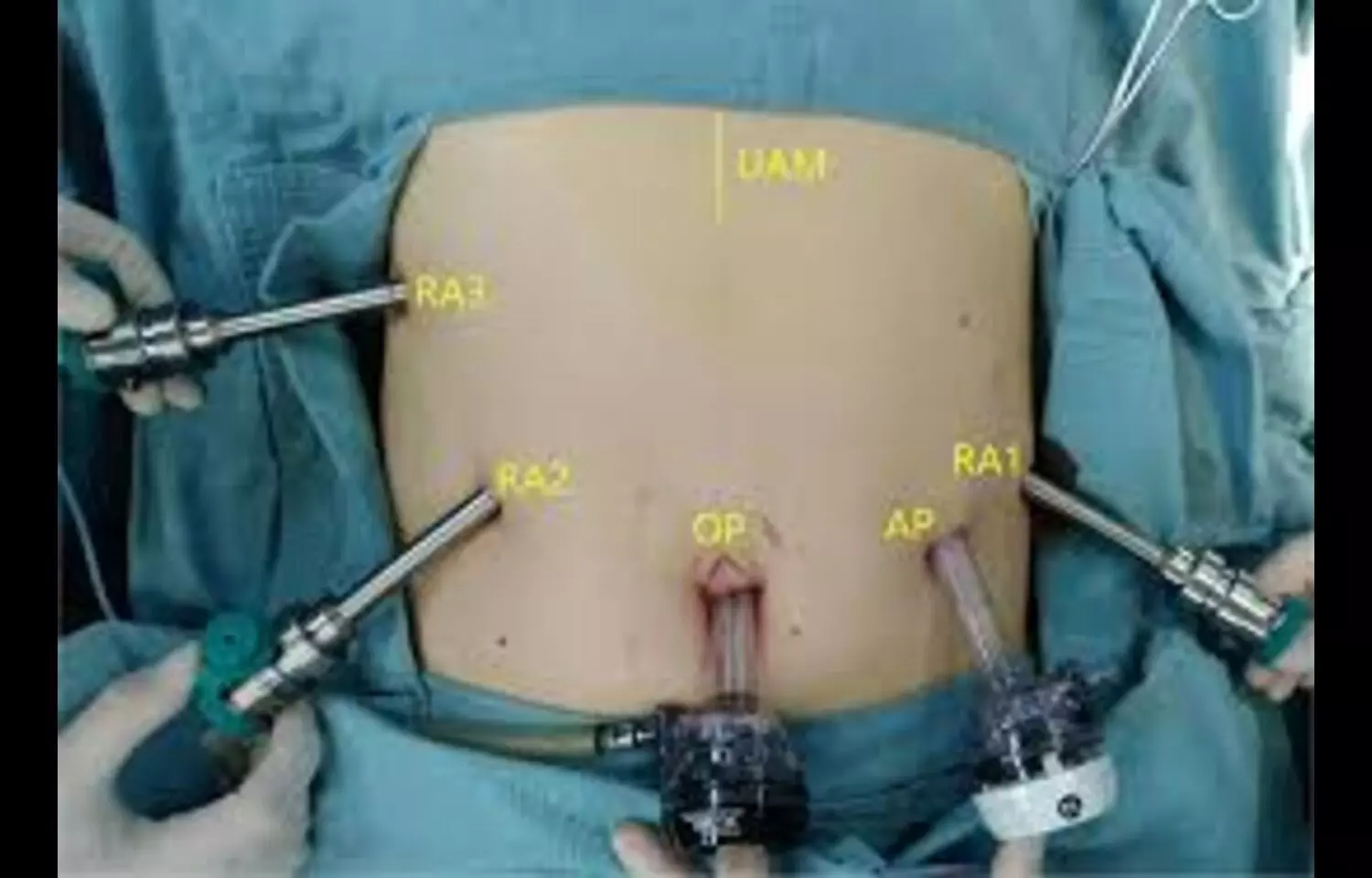

Pancreatoduodenectomy, also known as the Whipple procedure, is a complex surgical treatment for malignant and benign tumors of the pancreatic head and periampullary region. One of the most technically challenging aspects of this operation is dissection of the uncinate process, particularly when tumors are large and located adjacent to major vessels such as the superior mesenteric artery (SMA). With the advent of minimally invasive and robotic approaches, optimizing surgical strategies to enhance safety and oncologic outcomes has become a key focus in hepatopancreatobiliary surgery.

A new study has found that a mesenteric-route, SMA-first approach facilitates precise uncinate process dissection and may be a safe, feasible option for selected patients undergoing robot-assisted pancreatoduodenectomy. The approach was particularly beneficial in nonobese individuals with large pancreatic head tumors close to the SMA and portal vein, where conventional dissection can be more technically demanding.

In the study, patients undergoing the mesenteric-route SMA-first approach had favorable intraoperative and postoperative outcomes. The technique allowed early identification and control of the SMA, reducing intraoperative blood loss and providing a clearer operative field. Importantly, it enabled meticulous clearance of the uncinate margin, improving the chances of R0 resection in oncologic cases. Operative times were comparable to those of standard techniques, and postoperative complication rates, including pancreatic fistula and delayed gastric emptying, remained within expected ranges.

The authors noted that patient selection is critical. The technique may be best suited to nonobese patients, as excessive visceral fat can obscure visualization in the mesenteric window. Surgeons also need advanced robotic expertise and familiarity with vascular dissection to perform it safely. The findings suggest that this modified approach could expand the role of robotic surgery in complex pancreatic resections, offering a viable alternative for patients with tumors involving the uncinate process.

Keywords: SMA-first approach, mesenteric route, uncinate process dissection, robot-assisted pancreatoduodenectomy, pancreatic head tumors, minimally invasive surgery, vascular involvement, R0 resection, surgical oncology, Annals of Surgical Oncology

Israel: An 18-year follow-up of adults living with systemic lupus erythematosus (SLE) has revealed that more than half develop chronic kidney disease (CKD) over time, including a substantial proportion without a history of lupus nephritis (LN).

Skin cancer, the most common malignancy worldwide, is linked to ultraviolet (UV) exposure, which also drives synthesis of serum 25-hydroxyvitamin D (25(OH)D); the controversial association between 25(OH)D and skin cancer may therefore reflect cumulative UV exposure. This study aimed to assess the association and causality between 25(OH)D levels and skin cancer risk using National Health and Nutrition Examination Survey (NHANES, 1999–2018) data and Mendelian randomization (MR) analyses, and to evaluate 25(OH)D as a screening biomarker.

We integrated data from the National Health and Nutrition Examination Survey (1999–2018; n = 21,357 U.S. adults, including 631 skin cancer cases) with MR analyses using genome-wide association study-derived genetic variants to assess the causal relationship between serum 25(OH)D levels and skin cancer risk.

Higher 25(OH)D levels were associated with increased risks of nonmelanoma skin cancer [odds ratio (OR) (95% confidence interval (CI)) = 2.94 (2.10, 4.20)], melanoma [OR (95% CI) = 2.94 (1.73, 5.28)], and other skin cancers [OR (95% CI) = 2.10 (1.36, 3.36)]. MR analyses supported a causal relationship for nonmelanoma skin cancer [OR (95% CI) = 1.01 (1.00, 1.02)] and melanoma [OR (95% CI) = 1.00 (1.00, 1.01)]. Risks were highest in males, older adults, and individuals with obesity.

People with higher vitamin D levels had nearly 3× the odds of skin cancer compared with those with lower levels. Genetic analysis supports a modest causal link: vitamin D might contribute to risk, but the effect is small. Because vitamin D is produced in the skin on exposure to UV rays, the primary risk factor for skin cancer, high vitamin D levels probably indicate greater sun exposure, which drives the increased risk.
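To make the difference between "odds" and "risk" concrete, the sketch below computes a crude odds ratio and a crude risk ratio from a 2×2 table. The counts and the helper names `odds_ratio` and `risk_ratio` are hypothetical and for illustration only; they are not study data, and the study's estimates presumably come from adjusted regression models that this crude calculation does not reproduce. Skin cancer was uncommon in the sample (631 cases among 21,357 adults, about 3%), and when an outcome is rare the two measures are close.

```python
def odds_ratio(exposed_cases, exposed_noncases, unexposed_cases, unexposed_noncases):
    """Crude odds ratio from a 2x2 table: (a/b) / (c/d)."""
    return (exposed_cases / exposed_noncases) / (unexposed_cases / unexposed_noncases)


def risk_ratio(exposed_cases, exposed_noncases, unexposed_cases, unexposed_noncases):
    """Crude risk ratio from a 2x2 table: a/(a+b) divided by c/(c+d)."""
    risk_exposed = exposed_cases / (exposed_cases + exposed_noncases)
    risk_unexposed = unexposed_cases / (unexposed_cases + unexposed_noncases)
    return risk_exposed / risk_unexposed


if __name__ == "__main__":
    # Hypothetical counts (NOT study data): 1,000 people per group, rare outcome.
    a, b = 45, 955  # higher-25(OH)D group: cases, non-cases
    c, d = 16, 984  # lower-25(OH)D group: cases, non-cases
    print(round(odds_ratio(a, b, c, d), 2))
    print(round(risk_ratio(a, b, c, d), 2))
```

With these made-up counts the script prints 2.9 and 2.81: the odds ratio sits slightly further from 1 than the risk ratio, but with a rare outcome the gap is small.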

Higher serum 25(OH)D levels are associated with increased skin cancer risk, likely reflecting cumulative UV exposure. Routine monitoring of 25(OH)D, combined with UV exposure management, is recommended for risk stratification in skin cancer screening, particularly among high-risk groups. Validation in multiethnic cohorts is needed to confirm these findings.

Reference:

Meng J, Du R, Li P, Lyu J. Association between Serum 25-Hydroxyvitamin D Levels and Skin Cancer Risk: An Observational Study Based on NHANES and Mendelian Randomization Analysis. Cancer Screen Prev. 2025;4(2):89-97. doi: 10.14218/CSP.2025.00010.