Adverse situations experienced by the mother during childhood – such as neglect or physical, psychological or sexual violence – can trigger excessive weight gain in male children as early as the first two months of life. This was shown in a study that followed 352 pairs of newborns and their mothers in the cities of Guarulhos and São Paulo, Brazil. The results were published in the journal Scientific Reports.

The analyses indicated very early metabolic alterations in the babies, which not only led to weight gain above that expected for their age but may also increase their future risk of developing obesity and diabetes.

This is the first article resulting from a Thematic Project supported by FAPESP and the National Institutes of Health (NIH) in the United States. Using a database of 580 vulnerable pregnant women, the group is studying intergenerational trauma, i.e., negative effects that can be passed on to future generations, even if the offspring have not lived through such experiences.

Conducted by researchers from Columbia and Duke Universities, both in the United States, and the School of Medicine of the Federal University of São Paulo (EPM-UNIFESP) in Brazil, the study focuses on issues related to mother-baby interaction, development, and mental and physical health.

“We observed that although the babies were born weighing within the expected parameters, in the first few days of life they showed altered weight gain, far above what’s recommended as ideal by the World Health Organization [WHO],” says Andrea Parolin Jackowski, professor at UNIFESP and coordinator of the project in Brazil.

According to the WHO, the ideal weight gain in the first stage of life is up to 30 grams per day. However, the male babies in the study had an average weight gain of 35 grams per day – with some gaining up to 78 grams per day.

“The babies who took part in the study were born full-term, healthy and within the ideal weight range. All of the pregnancies we followed were low-risk, but our data showed that every adversity the mother experienced during childhood increased the babies’ weight gain by 1.8 grams per day. And this was limited to males,” the researcher reports.
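To put the reported figures in perspective, the short Python sketch below works through the arithmetic. It is only an illustration and not the study's statistical model. It assumes that the 1.8 grams per day per adversity adds up linearly, and that "the first two months" means 60 days. The function names are hypothetical. The coefficient describes an average association across the cohort, so the output should not be read as a prediction for any individual baby.

```python
# Illustrative arithmetic only; this is NOT the study's statistical model.
WHO_MAX_GAIN_G_PER_DAY = 30.0        # upper bound on ideal gain cited in the article
MEAN_MALE_GAIN_G_PER_DAY = 35.0      # average gain of male babies in the study
EXTRA_GAIN_PER_ADVERSITY = 1.8       # g/day per maternal childhood adversity (males)
DAYS = 60                            # assumption: "first two months" taken as 60 days


def extra_daily_gain(adversities: int) -> float:
    """Extra daily gain (g/day), assuming the association scales linearly."""
    if adversities < 0:
        raise ValueError("adversities must be non-negative")
    return EXTRA_GAIN_PER_ADVERSITY * adversities


def cumulative_extra_gain(adversities: int, days: int = DAYS) -> float:
    """Extra weight (g) accumulated over `days` under the same linear assumption."""
    return extra_daily_gain(adversities) * days


def mean_excess_over_who(days: int = DAYS) -> float:
    """Weight (g) by which the average male baby exceeds the WHO upper bound."""
    return (MEAN_MALE_GAIN_G_PER_DAY - WHO_MAX_GAIN_G_PER_DAY) * days


if __name__ == "__main__":
    for n in range(5):
        print(f"{n} adversities: +{extra_daily_gain(n):.1f} g/day, "
              f"+{cumulative_extra_gain(n):.0f} g over {DAYS} days")
    print(f"Average excess over WHO bound: {mean_excess_over_who():.0f} g")
```

Under these assumptions, three maternal adversities would correspond to roughly 5.4 extra grams per day, about the same as the gap between the 35 grams per day observed on average and the 30 grams per day cited as the WHO upper bound.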

According to Jackowski, there are many factors that can influence a baby’s weight in early life, and maternal childhood trauma appears to be one of them. For this reason, the analysis took care to control for so-called confounders – variables related to the mothers’ stress levels that could influence the results. Some examples include lifetime trauma experiences (the effects of which are cumulative) and current trauma, as well as education level and socioeconomic status.

“It’s also important to note that 70% of the babies who took part in the study were exclusively breastfed. The other 30% were on mixed feeding [a combination of breast milk and formula]. This means that they weren’t eating filled cookies or other foods that could actually change their weight. Therefore, the results suggest the occurrence of an early metabolic alteration in these babies,” she says.

Why only boys?

According to the researcher, maternal trauma during childhood affected the weight of male babies only, because of physiological variations in the placenta associated with the sex of the fetus.

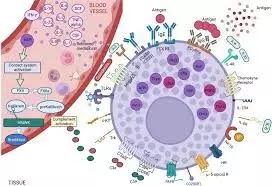

The placenta is a temporary organ composed of maternal and fetal tissue that shows structural differences and differences in the regulation and expression of steroids and proteins depending on the sex of the baby. “Male fetuses develop strategies to maintain constant growth in the face of an adverse intrauterine environment, leading to a greater risk of prematurity and fetal death,” explains the researcher.

In addition, she adds, childhood adversity is known to increase the risk of depression and anxiety during pregnancy, which can lead to increased levels of pro-inflammatory cytokines and cortisol in the intrauterine environment. “It appears that the placenta of female fetuses adapts to protect them, slowing down the growth rate without restricting intrauterine growth [i.e., the size of the baby is within the expected range at the end of pregnancy] and allowing for a higher survival rate,” she explains.

Another important issue is that the placenta of male fetuses tends to be more susceptible to fluctuations in substances and metabolites present in the maternal bloodstream compared to female placentas. “As a result, in these cases of trauma, it can become more permeable, causing the male fetus to be more exposed to inflammatory factors resulting from high levels of stress, such as cortisol and interleukins, for example.”

The work now published is the first to identify intergenerational trauma as a trigger for physical changes at such an early age. “It’s already known that adverse events in the mother’s childhood can trigger psychological and developmental problems, but our study is pioneering in showing that they can affect physical problems, such as weight gain, as early as the first two months of life,” says Jackowski.

The research team, which includes Vinicius O. Santana and FAPESP postdoctoral fellow Aline C. Ramos, will now track the weight of children whose mothers suffered adversity in childhood until the children are 24 months old. “We’re going to follow them for longer because we want to investigate the impact of the introduction of food, which usually occurs at 6 months of age,” says Jackowski.

According to Jackowski, the findings suggest that these metabolic changes can be modified. “It’s not a matter of determinism. We need to monitor how the metabolism and inflammatory factors behave in these babies over a longer period of time to understand how to modulate this process. It’s important to know that all of this is modifiable, and we’re now going to look at how we can intervene,” she says.

Reference:

Santana, V.O., Ramos, A.C., Cogo-Moreira, H. et al. Sex-specific association between maternal childhood adversities and offspring’s weight gain in a Brazilian cohort. Sci Rep 15, 2960 (2025). https://doi.org/10.1038/s41598-025-87078-5